Saturday, February 29, 2020

Miss Jameson and her Optophone

Earlier I had wondered why chordal technology wasn't more widespread. It seemed especially appropriate as an audio aid for the blind.

In fact a chordal machine was developed, but it couldn't compete at the time. Later on it helped to spur development of optical character recognition.

The interval between "at the time" and "later on" was 50 years, and it was bridged by one brave and patient woman.

So here's a tremendous heartfelt salute to Mary Jameson, who kept an old technology up and running for 50 years until circuitry and computing power were ready to redo the idea.

= = = = =

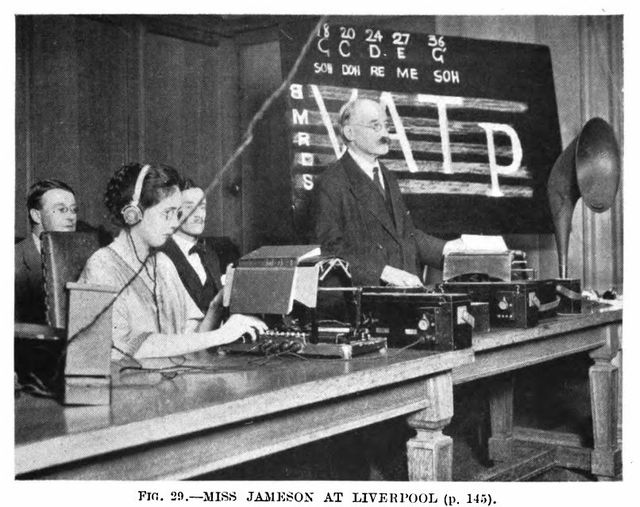

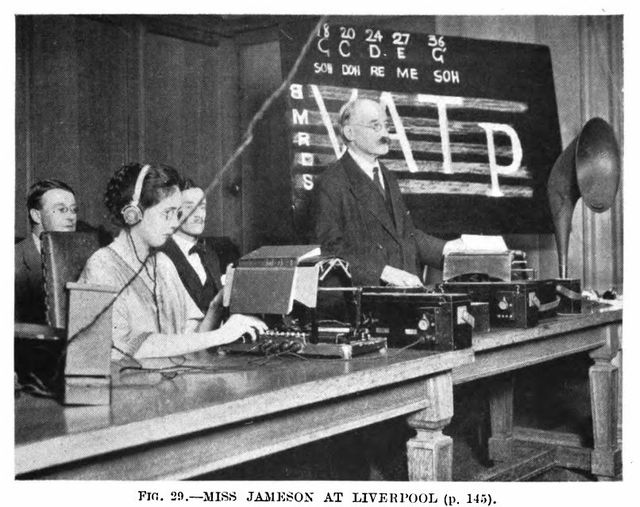

As soon as selenium cells were developed, inventors tried to use them to help blind people read and see pictures. The most successful effort was the Optophone, by Edmund Edward Fournier d'Albe. In 1917 he connected up with Mary Jameson, an expert Braille reader.

Miss Jameson turned out to have a unique talent and passion for the Optophone. She traveled to schools and conventions and gave demonstrations, and evangelized for it constantly.

From the d'Albe book:

This was the optophone — risen like a phoenix from its ashes — which came out into the world once more on August 27, 1918, at the British Scientific Products Exhibition held at King's College, London. The Author gave a lecture at which Sir Richard Gregory, F.R.A.S., Editor of Nature, presided. At the conclusion of the lecture Miss Jameson gave a test reading from Dante's Inferno. A page was chosen by the audience. Miss Jameson put the book on the bookrest of the optophone and began to read. The words she read were: "in the light." "Is that all?" said the Chairman. "Yes," said the blind reader. "There are only three words in this line, with a full stop after them." It turned out to be quite correct, and the words read seemed to many of those present to be very appropriate to the occasion.

Despite Miss Jameson's evangelism, the Optophone didn't achieve wide acceptance. Her talent was apparently an outlier; other blind people couldn't make the machine work properly. It was fussy about type faces and sizes, and pretty much required one standard size of line. Reprinting books for the Optophone would have been much less expensive than Brailling them, but the distinction didn't matter. If you're going to reprint for a fussy machine that nobody uses, you might as well reprint for unfussy Braille that everybody uses.

In 1970 the idea was picked up by Mauch Labs in Dayton, a prosthetics research facility with close ties to the Veterans Administration. Mauch thought the Optophone might be an easier path to reading for newly blinded Vietnam vets. Braille is like a separate language, easy to learn when young, not so easy for adults. Miss Jameson consulted with Mauch, helping them to get the feel of the concept. They expanded it in several directions, but again the results were disappointing. Other digital researchers picked up some of Mauch's extensions and ran with them, ultimately developing true OCR.

This webpage gives a detailed account of the Mauch effort, and includes a brief 1971 tape of a Mauch researcher using one of their modernized variants. You quickly get a sense of the fussiness and slowness of the process, even with an expert user.

= = = = =

I've tried to animate the version that Miss Jameson used. Here's the setup.

The Optophone was about the size of a typewriter, and the action was similar to a typewriter's carriage return. It was powered by a battery (not included) and the output went to headphones. A book was draped over the top, and Miss Jameson scanned each line with the tracker-bar. The tracker had a governor in the reading direction and free-wheeled in the other direction, to maintain a steady speed while actually scanning.

Taking off the book and leaving just one page, transparent for clarity:

The heart of the machine is the Tracker, the pear-shaped thing. Inside the tracker is the Tone Disc, which has 5 circles of holes at different rates. The Tone Disc constantly rotates like a siren for light.

A light beam comes from a bulb mounted under the Tone Disc. The beam passes through the Tone Disc, interrupted by all five circles at once. I've shown the five frequencies as different colors here so you can see how they hit the text. The outermost circle (red) is the highest frequency, and it hits ascenders and uppercase letters. The innermost circle (blue) is the lowest frequency, and it hits descenders. The three middle frequencies hit the main body of the letters.
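For anyone who wants to play with the idea, the five-band layout can be sketched in a few lines of Python. This is only an illustration, not the program in the ZIP: the function name `band_darkness`, the threshold, and the tone pitches in `TONES_HZ` are all my assumptions (the actual pitches of the disc aren't given here). It takes one vertical slice of a scanned line and reports how much ink each of the five bands sees.

```python
# Hypothetical tone pitches in Hz, lowest band first. These are placeholders,
# not the pitches of the real Tone Disc.
TONES_HZ = [392.0, 523.25, 587.33, 659.25, 783.99]

def band_darkness(column, n_bands=5, threshold=128):
    """Split one pixel column (listed top to bottom, 0 = black, 255 = white)
    into n_bands equal slices and return the dark fraction of each slice,
    ordered from the bottom slice (lowest tone) to the top slice (highest)."""
    height = len(column)
    fractions = []
    for band in range(n_bands):
        top = band * height // n_bands
        bottom = (band + 1) * height // n_bands
        pixels = column[top:bottom]
        dark = sum(1 for p in pixels if p < threshold)
        fractions.append(dark / len(pixels) if pixels else 0.0)
    # Slices were collected top-down; reverse so index 0 is the descender band.
    return fractions[::-1]
```

Pairing the result with the tones, as in `list(zip(TONES_HZ, band_darkness(column)))`, shows which notes a given slice of a letter would sound: an ascender lights up only the last (highest) entry, a descender only the first.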

A selenium cell mounted beside the top end of the Tracker picks up the sum of the interrupted lights after they have bounced off the text itself. Selenium's resistance drops as more light falls on it. The simple circuitry uses this change to give more sound when each frequency hits black, and less sound when each frequency hits white.

The result is a constantly changing chord, similar in flavor to the changing formants of speech.
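In digital terms the selenium cell is just an adder. Here's a minimal sketch of that sum, assuming each band contributes a sine wave whose loudness follows how much black it sees. The name `chord_sample` and the choice of pure sines are my assumptions; the real disc chops the light, so its tones would be buzzier than this.

```python
import math

def chord_sample(levels, tones_hz, t):
    """Return one audio sample at time t (in seconds).

    levels[i] is the dark fraction (0.0 to 1.0) seen by band i, and
    tones_hz[i] is that band's pitch. Black ink sounds, white paper is
    silent, and the five tones are simply added together."""
    total = sum(level * math.sin(2 * math.pi * freq * t)
                for level, freq in zip(levels, tones_hz))
    # Divide by the number of tones so the sum stays within [-1.0, 1.0].
    return total / len(tones_hz)
```

Calling it once per output sample while the levels change from column to column gives the shifting chord: all-white paper returns silence, and a tall stroke that covers every band sounds all five tones at once.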

= = = = =

I rigged up a Python program to replicate the original in digital form. It sounds somewhat different from the Mauch version in the 1971 tape. It also replicates the basic failing of the original, in that the display is damned hard to sync up with the lines on the page.
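The output side of such a program is the easy part. As a rough sketch (not the code in the ZIP), here's one way to turn a list of samples into a playable file with the standard `wave` module. The function name `write_wav`, the 8000 Hz rate, and the mono 16-bit format are my assumptions.

```python
import struct
import wave

def write_wav(samples, path, rate=8000):
    """Write floats in [-1.0, 1.0] as a mono 16-bit WAV file.

    Returns the number of frames written."""
    frames = bytearray()
    for s in samples:
        s = max(-1.0, min(1.0, s))  # clip anything out of range
        frames += struct.pack("<h", int(s * 32767))
    with wave.open(path, "wb") as out:
        out.setnchannels(1)      # mono
        out.setsampwidth(2)      # 2 bytes per sample = 16-bit
        out.setframerate(rate)
        out.writeframes(bytes(frames))
    return len(frames) // 2
```

The resulting WAV opens directly in Audacity, which is handy for lining up the envelope against the text.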

My program doesn't like the two-line sample I used for the visual demo, so I tried a longer three-line sample with more satisfying results.

The three-line sample:

Here's the sound in MP3.

And here's the text lined up with the waveform envelope as seen in Audacity:

You can almost see the letters in the waveform, especially in the third line where the program got sync'd up better.

= = = = =

I've placed a ZIP of the Poser model and the Python program here, for the sake of 'scientific replicability'. The ZIP is a standard Poser runtime, and the model requires Poser. The Python program to produce sound will run in a typical Python install and doesn't use Poser. It does require a couple of modules (PIL, wave). The wave module ships with the standard library, but PIL (now distributed as Pillow) may need to be installed separately.

Labels: Patient people, Patient things, Real World Math