Thursday, September 30, 2021

Mathematicians don't learn or teach

I like to salute and thank the people who do the hard work of real archiving. Preserving the past is the MOST IMPORTANT duty of scholars in a time of Room 101 and Github.

Bitsavers is a comparatively small but excellent archive of documents from the early years of computing, from 1950 to 1990. As I model the 1957 IBM RAMAC system, I've relied heavily on Bitsavers.

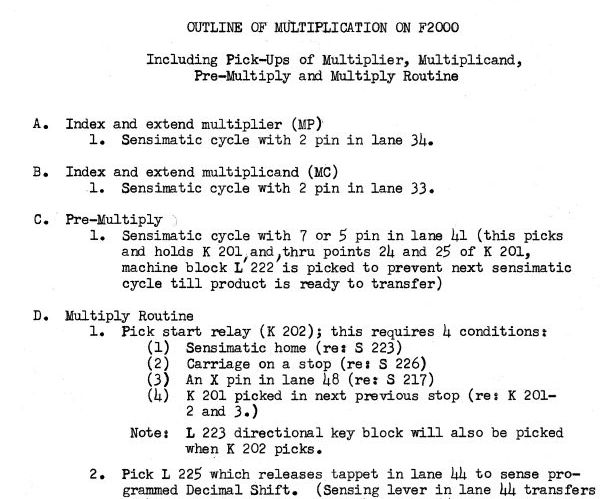

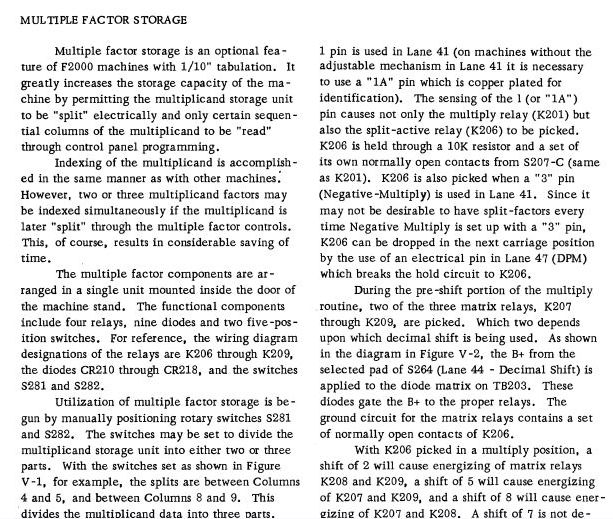

Most of these manuals were printed in offset form, using regular typewriters or Varitypers to lay out the pages. IBM had the budget for proper linotype and hot-lead printing, but most of the upstart companies and academic writers didn't.

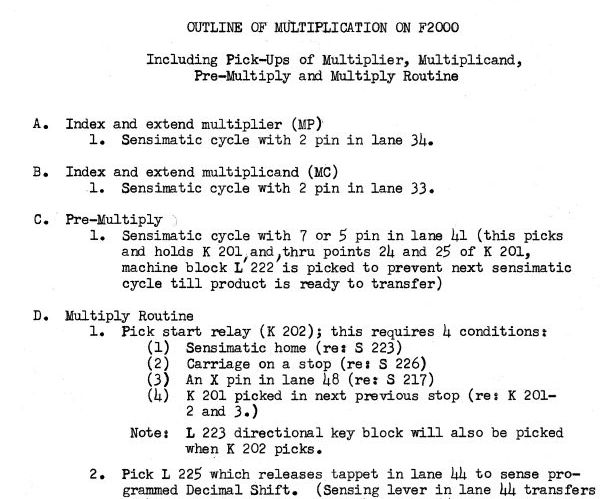

From a 1962 Burroughs manual, a mix of typewriter and Varityper.

These instructions are hugely complex and detailed, but everything is in normal English, so you can read it unambiguously.

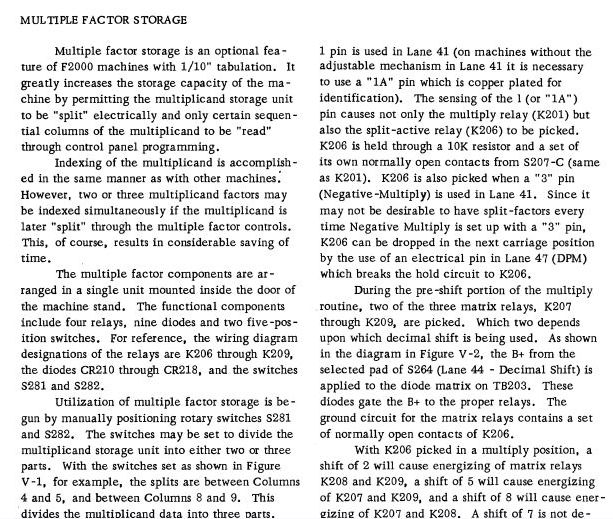

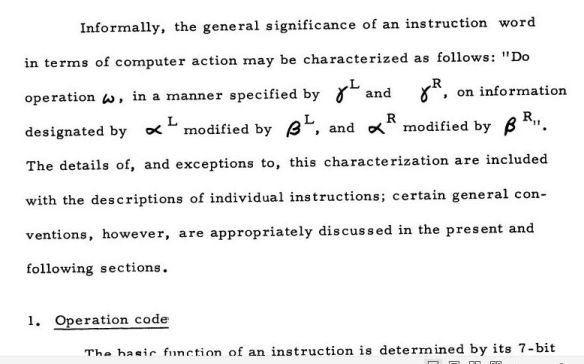

When mathematicians wrote manuals, they continued their blackboard habits of mixing Greek and Hebrew and Roman and Fraktur and made-up symbols, scrawled loosely and sloppily.

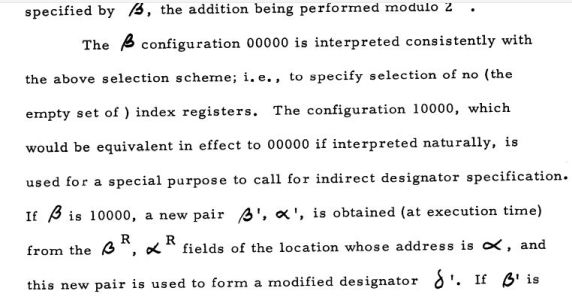

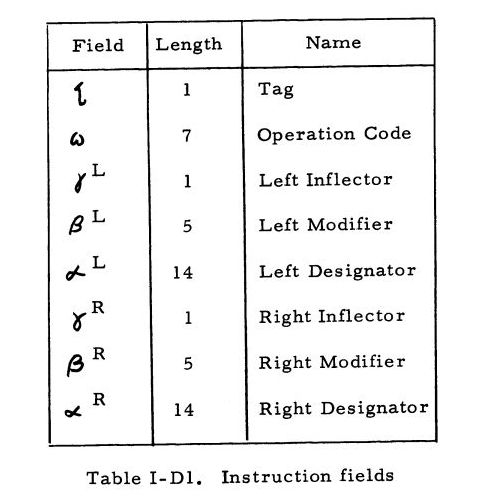

From a MANIAC III manual written at the University of Chicago in 1962:

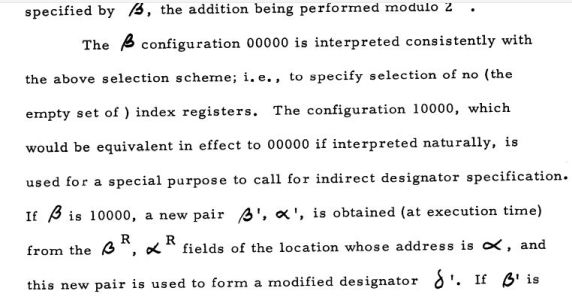

Can you tell the difference between the alpha and gamma? And what is that symbol for Tag? Is that a tau or an iota with an acute accent over it?

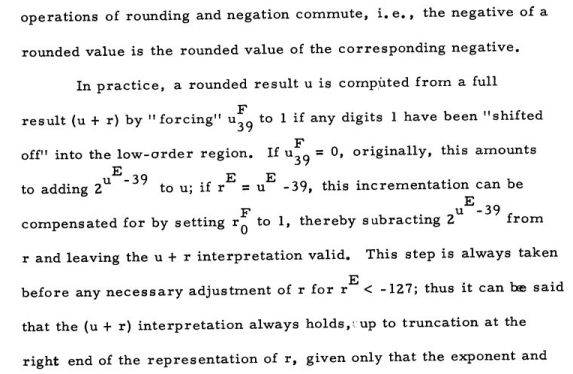

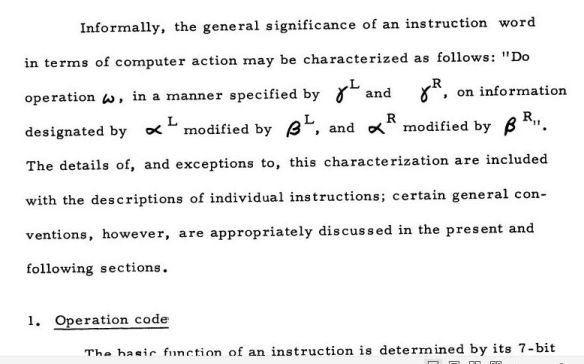

Note the beta superscripted by a capital R which is subscripted by 11, or is it [1 sub 1] or [1 in the first category and 1 in the second category]? No, it's not any of those things. It's the fucking RIGHT QUOTATION MARK for the sentence.

More to the point, they had just defined specific WORDS for their concepts. Operation, tag, information, left designator, right inflector. THEY DIDN'T NEED THE GREEK. The entire discussion would have been clearer using the ACTUAL FUCKING WORDS.

Do an Operation, in a manner specified by the left and right Inflectors, on information designated by left Designators and modified by left Modifiers; and on information designated by right Designators and modified by right Modifiers.

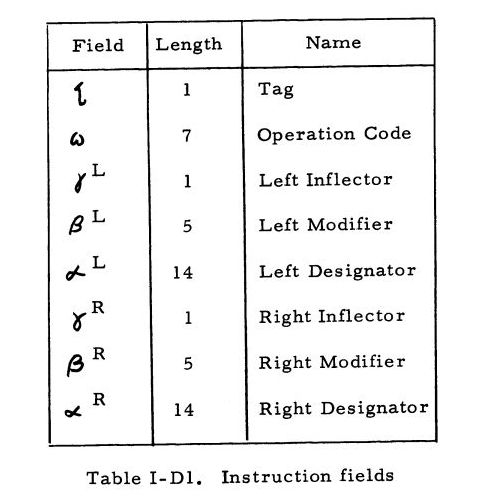

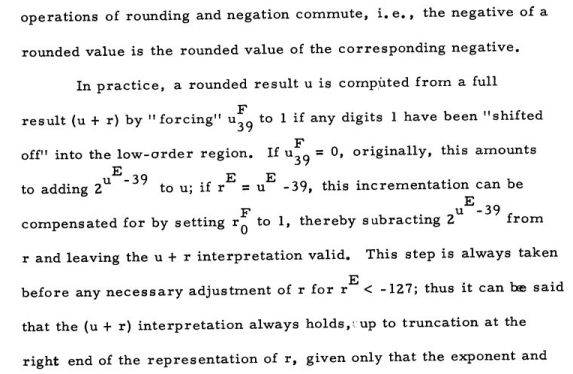

Even when they accidentally used only Roman letters, the blackboard habit of supersubsuperscripting continued.

The limited character set on keypunch keyboards FORCED programmers to talk English.

No Greek, no math symbols, no subs and supers. Just uppercase Roman, numbers, and a few bookkeeping symbols like dollars and percent. COBOL was written on and for keypunches, and it originally had no symbols at all.

DIVIDE NUMA BY NUMB GIVING RES-DIV.

Naming variables in English was the biggest contribution of programming.

Later keyboards and languages made longer names possible, and introduced more symbols, but never dropped to the level of mathematicians.
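
A rough sketch of the same point in a modern language, Python here. The function and variable names (`q`, `divide`, `dividend`, `divisor`, `quotient`) are made up for illustration and don't come from any manual. Both functions do the same arithmetic as the COBOL line above; only the second one tells you what it's doing.

```python
# Blackboard style: single letters, so the meaning lives only in the writer's head.
def q(a, b):
    return a / b


# Keypunch style: the names carry the meaning, as in DIVIDE ... BY ... GIVING ...
def divide(dividend, divisor):
    """Return dividend divided by divisor."""
    return dividend / divisor


quotient = divide(dividend=10, divisor=4)
print(quotient)  # prints 2.5
```

The call site is where the difference shows. `q(10, 4)` says nothing about which number is divided by which, while `divide(dividend=10, divisor=4)` reads almost like the COBOL sentence.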

Those academics at Chicago had been using computers and keypunches for several years, and still hadn't learned English. Modern mathematicians still insist on a tangled jumble of Greek, Hebrew, supersubsupersubscripts, and an occasional SINGLE letter of English. Never never never allow a word to contaminate the purity of obfuscation.

Labels: Real World Math, storage